Below is an approach to operationalizing dynamic prior authorization (PA) criteria, inspired by knockout gene testing. The idea is to build a decision tree that “prunes” question branches once they have been answered in a given context over the course of a patient’s coverage access journey. The goal is to reduce questions and answers that are redundant or that providers could exploit, while maintaining the rigor of clinical oversight. Here’s how you might implement this concept:

1. Define the Knockout Rule

- Establish Criteria:

Determine which questions or criteria within your PA progression qualify for knockout status. For example, if a specific override question is answered in a certain way once (or a predefined number of times), mark that branch as “knocked out” for that patient or provider for a set period (intermittently or indefinitely).

- Contextual Boundaries:

Define under what conditions the knockout applies. This might be based on patient-specific factors (e.g., a documented exception or a one-time override) or on the frequency of similar override responses across similar cases. Domain expertise will help to contextualize these.
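To make the rule definition concrete, here is a minimal sketch of how a knockout rule might be represented. The class name `KnockoutRule`, its fields, and the example question id `step_therapy_failure` are all hypothetical; a real system would take these from your own criteria library.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class KnockoutRule:
    """Hypothetical description of when a PA question may be knocked out."""
    question_id: str
    qualifying_answer: str               # the answer that counts toward a knockout
    required_count: int = 1              # once, or a predefined number of times
    scope: str = "patient"               # assumed values: "patient" or "provider"
    duration_days: Optional[int] = None  # None means the knockout is indefinite

    def is_triggered(self, answers: list[str]) -> bool:
        """True once the qualifying answer has been recorded often enough."""
        return answers.count(self.qualifying_answer) >= self.required_count

    def expires_on(self, knocked_out_on: date) -> Optional[date]:
        """Date the knockout lapses, or None if it is indefinite."""
        if self.duration_days is None:
            return None
        return knocked_out_on + timedelta(days=self.duration_days)


rule = KnockoutRule("step_therapy_failure", "yes", required_count=1, duration_days=365)
print(rule.is_triggered(["yes"]))         # True
print(rule.expires_on(date(2025, 1, 1)))  # 2026-01-01
```

The contextual boundaries (patient-specific exceptions, frequency across similar cases) would sit on top of this as extra conditions; they are left out here because they depend on domain expertise.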

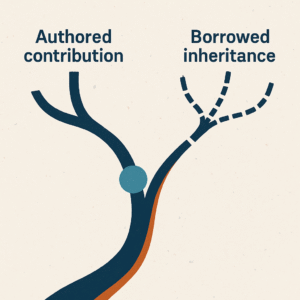

2. Dynamic Decision Tree Engine

- Modular Design:

Build your PA decision support system as a modular decision tree where each node (question) has an associated state: active, knocked out, or conditionally active.

- State Transition Logic:

Implement rules so that when a node’s condition is met (e.g., a valid override is recorded), its state changes to “knocked out.” The system then bypasses that node in subsequent PA workflows for the same patient.

- Feedback Loop:

Include a mechanism to reintroduce “knocked out” questions if certain conditions change—such as a lapse of time, a change in patient status, or if the system detects inconsistent patterns that suggest potential exploitation.
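The node states, the knockout transition, and the feedback loop can be sketched as a small state machine. This is one possible shape, not a definitive design: the names `NodeState`, `QuestionNode`, and `questions_to_ask` are made up for illustration, and the sketch assumes a “conditionally active” node is still asked, since the text above does not define that state further.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class NodeState(Enum):
    ACTIVE = "active"
    KNOCKED_OUT = "knocked out"
    CONDITIONALLY_ACTIVE = "conditionally active"


@dataclass
class QuestionNode:
    question_id: str
    state: NodeState = NodeState.ACTIVE
    knocked_out_until: Optional[date] = None  # None while knocked out = indefinite

    def knock_out(self, until: Optional[date] = None) -> None:
        """State transition: a valid override was recorded for this node."""
        self.state = NodeState.KNOCKED_OUT
        self.knocked_out_until = until

    def refresh(self, today: date, status_changed: bool = False,
                exploitation_flag: bool = False) -> None:
        """Feedback loop: reinstate the node if time lapsed or conditions changed."""
        if self.state is not NodeState.KNOCKED_OUT:
            return
        lapsed = self.knocked_out_until is not None and today >= self.knocked_out_until
        if lapsed or status_changed or exploitation_flag:
            self.state = NodeState.ACTIVE
            self.knocked_out_until = None


def questions_to_ask(nodes: list[QuestionNode], today: date, **signals: bool) -> list[str]:
    """Return the ids of questions the workflow should still present."""
    asked = []
    for node in nodes:
        node.refresh(today, **signals)
        if node.state is not NodeState.KNOCKED_OUT:  # assumption: conditional nodes are asked
            asked.append(node.question_id)
    return asked


nodes = [QuestionNode("diagnosis"), QuestionNode("step_therapy")]
nodes[1].knock_out(until=date(2025, 7, 1))
print(questions_to_ask(nodes, date(2025, 3, 1)))  # ['diagnosis']
print(questions_to_ask(nodes, date(2025, 8, 1)))  # ['diagnosis', 'step_therapy']
```

Keeping the reinstatement check inside `refresh` means every workflow run re-evaluates knocked-out nodes before deciding what to skip, so a stale knockout cannot silently persist.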

3. Data Capture and Analytics

- Historical Data Repository:

Store detailed logs of each PA event, including which questions were answered, override usage, and outcomes.

- Analytics Engine:

Use analytics or machine learning algorithms to monitor patterns for knockout-eligible questions. For example, if a particular question is repeatedly answered the same way, the system can flag these instances for review and adjust the knockout criteria or trigger alerts for human intervention.

- Thresholds and Metrics:

Define quantitative thresholds (e.g., “if 3 consecutive overrides occur for question X, then knock it out for 6 months”) that guide the dynamic activation or deactivation of criteria.
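The example threshold above (“3 consecutive overrides, then knock out for 6 months”) might be evaluated like this. It is a rough sketch: the function names are hypothetical, the history is assumed to be ordered oldest to newest, and a month is approximated as 30 days to keep the example in the standard library.

```python
from datetime import date, timedelta
from typing import Optional


def trailing_overrides(history: list[bool]) -> int:
    """Count consecutive overrides at the end of the history (most recent last)."""
    count = 0
    for was_override in reversed(history):
        if not was_override:
            break
        count += 1
    return count


def knockout_until(history: list[bool], today: date,
                   threshold: int = 3, months: int = 6) -> Optional[date]:
    """Return the knockout end date if the threshold is met, else None."""
    if trailing_overrides(history) < threshold:
        return None
    return today + timedelta(days=30 * months)  # assumption: 1 month ~ 30 days


print(knockout_until([True, True], date(2025, 1, 1)))               # None
print(knockout_until([False, True, True, True], date(2025, 1, 1)))  # 2025-06-30
```

Because the threshold and duration are plain parameters, they can be tuned per question from the analytics described above rather than hard-coded.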

4. Integration with Clinical Decision Support

- Natural Language Processing (NLP):

Incorporate NLP to analyze the clinical language accompanying overrides. This can help ensure that the criteria for knockouts are applied only when the override context truly supports it, and not due to ambiguous or lenient phrasing.

- Real-Time Adaptation:

Enable your system to adapt in real time. For instance, if the NLP engine identifies a trend suggesting that a knocked-out question should be reactivated because clinical parameters have changed, it can alert administrators or automatically reinstate the branch.

5. User Interface and Transparency

- Visual Flowcharts:

Provide administrators and reviewers with a dynamic visual flowchart of the PA decision tree. This display should show which branches have been knocked out and why, along with historical data that led to that decision. I personally like FigJam for visually representing state-flows, but there are plenty of options to visualize decision tree progressions.

- Audit Trail:

Maintain an audit log for each decision change. This transparency ensures that regulatory or quality assurance reviews can understand the rationale behind dynamic question elimination.
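An audit trail for state changes could be as simple as an append-only list of records that refuses entries without a rationale. The sketch below is illustrative only: `AuditEntry`, `AuditTrail`, the field names, and the sample ids are assumptions, and a production system would persist entries to durable storage rather than memory.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    patient_id: str
    question_id: str
    old_state: str
    new_state: str
    rationale: str


class AuditTrail:
    """Append-only log of knockout state changes (in-memory for illustration)."""

    def __init__(self) -> None:
        self._entries: list[AuditEntry] = []

    def record(self, patient_id: str, question_id: str, old_state: str,
               new_state: str, rationale: str,
               when: Optional[datetime] = None) -> AuditEntry:
        if not rationale.strip():
            raise ValueError("every state change needs a documented rationale")
        when = when or datetime.now(timezone.utc)
        entry = AuditEntry(when.isoformat(), patient_id, question_id,
                           old_state, new_state, rationale)
        self._entries.append(entry)
        return entry

    def history(self, patient_id: str, question_id: str) -> list[AuditEntry]:
        """All recorded changes for one patient and question, oldest first."""
        return [e for e in self._entries
                if e.patient_id == patient_id and e.question_id == question_id]

    def export(self) -> str:
        """Serialize the log as JSON lines for a regulatory or QA review."""
        return "\n".join(json.dumps(asdict(e)) for e in self._entries)


trail = AuditTrail()
trail.record("patient-001", "step_therapy", "active", "knocked out",
             "Documented failure of a formulary-preferred alternative")
print(len(trail.history("patient-001", "step_therapy")))  # 1
```

The same entries could also feed the flowchart view, so the “why” shown for each knocked-out branch comes straight from the log.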

6. Pilot and Iterate

- Pilot Program:

Start with a pilot test in a controlled environment. Select a subset of criteria that are at high risk of redundancy or exploitation and implement the knockout logic.

- Iterative Feedback:

Collect feedback from clinicians and administrators. Monitor outcomes in terms of both efficiency (fewer unnecessary questions) and safety (ensuring no critical clinical detail is missed).

- Refinement:

Use the pilot data to adjust thresholds, refine NLP parameters, and optimize the dynamic rules before a broader rollout.

Sample

Patient Identification

- Question 1: Has this patient previously received approval for the requested medication under current coverage guidelines?

- If Yes: Confirm previous PA details (dosage, indication). [Knockout subsequent redundant clinical justification questions if previously satisfied.]

- If No: Proceed to clinical justification.

Clinical Justification

- Question 2: Does the patient have a confirmed diagnosis consistent with the requested medication?

- If No: PA denied (criteria not met).

- If Yes: Proceed to Question 3.

- Question 3: Does the patient have a previously documented therapeutic failure or adverse reaction to formulary-preferred alternatives?

- If Yes: Record details and knock out this question for future PAs involving the same medication class for the next 12 months.

- If No: Require trial or documentation before approval.

Treatment Continuity and Exploitation Prevention

- Question 4: Is the prescribing provider or clinic flagged for excessive override requests within the past 6 months?

- If Yes: Automatically route for clinical review; consider reactivating previously knocked-out branches.

- If No: Proceed.

- Question 5: Has the patient demonstrated adherence (>80%) to therapy based on claims or pharmacy records?

- If Yes: Knockout adherence-related questions for the next PA period (e.g., 6 months).

- If No: Provide counseling requirement and limit PA to a shorter duration pending adherence re-evaluation.

Safety and Monitoring

- Question 6: Have required safety monitoring labs/tests been completed within the recommended interval?

- If Yes: Knockout safety-monitoring documentation requirements for the next two PA cycles.

- If No: Approval contingent upon obtaining safety monitoring within a defined timeframe (e.g., 30 days).

Contextual Clinical Evaluation (NLP-based)

- Question 7: Does the provider’s clinical note (evaluated via NLP) support an appropriate clinical rationale consistent with current guidelines?

- If Yes: Approve without additional manual review for 6 months (knockout NLP contextual review).

- If No/Unclear: Escalate for manual clinical review.

Feedback Loop and Reinstatement Check

- Question 8: Have there been significant clinical status changes (new diagnosis, hospitalization, treatment adjustments) since the last approval?

- If Yes: Reactivate previously knocked-out questions to ensure updated clinical appropriateness.

- If No: Continue knockout criteria state as defined above.
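The knockout durations in the sample can be collected into a small lookup table, which makes the variation between questions easy to see. This is a sketch under assumptions: the ids `Q3` to `Q8` just mirror the question numbers above, `Knockout` and `apply_answers` are hypothetical names, and the sketch assumes a “Yes” to Question 8 clears all existing knockouts before new ones are applied. Question 4 is left out because the sample only says to consider reactivation there.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Knockout:
    months: Optional[int] = None     # time-based knockout
    pa_cycles: Optional[int] = None  # cycle-based knockout


# Knockout applied when the question is answered "Yes" in the sample above.
SAMPLE_KNOCKOUTS = {
    "Q3": Knockout(months=12),   # failure of formulary-preferred alternatives
    "Q5": Knockout(months=6),    # adherence above 80%
    "Q6": Knockout(pa_cycles=2), # safety monitoring completed
    "Q7": Knockout(months=6),    # NLP-supported clinical rationale
}


def apply_answers(answers: dict[str, bool],
                  existing: dict[str, Knockout]) -> dict[str, Knockout]:
    """Return the knockout state after one PA request."""
    # Q8: a significant clinical status change reactivates prior knockouts.
    state = {} if answers.get("Q8") else dict(existing)
    for question_id, knockout in SAMPLE_KNOCKOUTS.items():
        if answers.get(question_id):
            state[question_id] = knockout
    return state


state = apply_answers({"Q3": True, "Q5": False, "Q6": True}, {})
print(sorted(state))                         # ['Q3', 'Q6']
print(apply_answers({"Q8": True}, state))    # {}
```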

Commentary

Note how there is a high degree of flexibility based on known parameters. I have found that use of biologic drugs and injectables for chronic conditions is rather “sticky”. The assumption is that individuals who begin taking these quality-of-life-enhancing drugs will continue to take them. These are therapeutic options that individuals rely on to manage debilitating disease. Also, note how the knockout duration varies. This allows for dynamic assessment of distinct criteria at unique intervals. If an efficacy parameter should be assessed at 12 weeks, but the safety piece isn’t relevant for at least a year, the knockout logic can account for this. As long as the rationale is documented, I believe there is a lot of upside in using this feature to optimize coverage access for plans, providers, and patients.

Summary

By drawing an analogy to knockout gene testing, you can develop a dynamic PA decision engine that “turns off” redundant, untimely, or exploited questions after they’ve served their purpose. This involves:

- Defining clear knockout rules and criteria

- Building a modular decision tree with state transitions

- Capturing and analyzing historical data to set thresholds

- Integrating NLP for contextual understanding

- Ensuring transparency and auditability

- Piloting the approach to refine and optimize the system

This operationalization not only streamlines the prior authorization process but also helps safeguard against potential exploitation, ultimately contributing to more efficient and robust pharmacy benefit manager (PBM) processes that facilitate coverage access (or restriction) for the right patients at the right time.